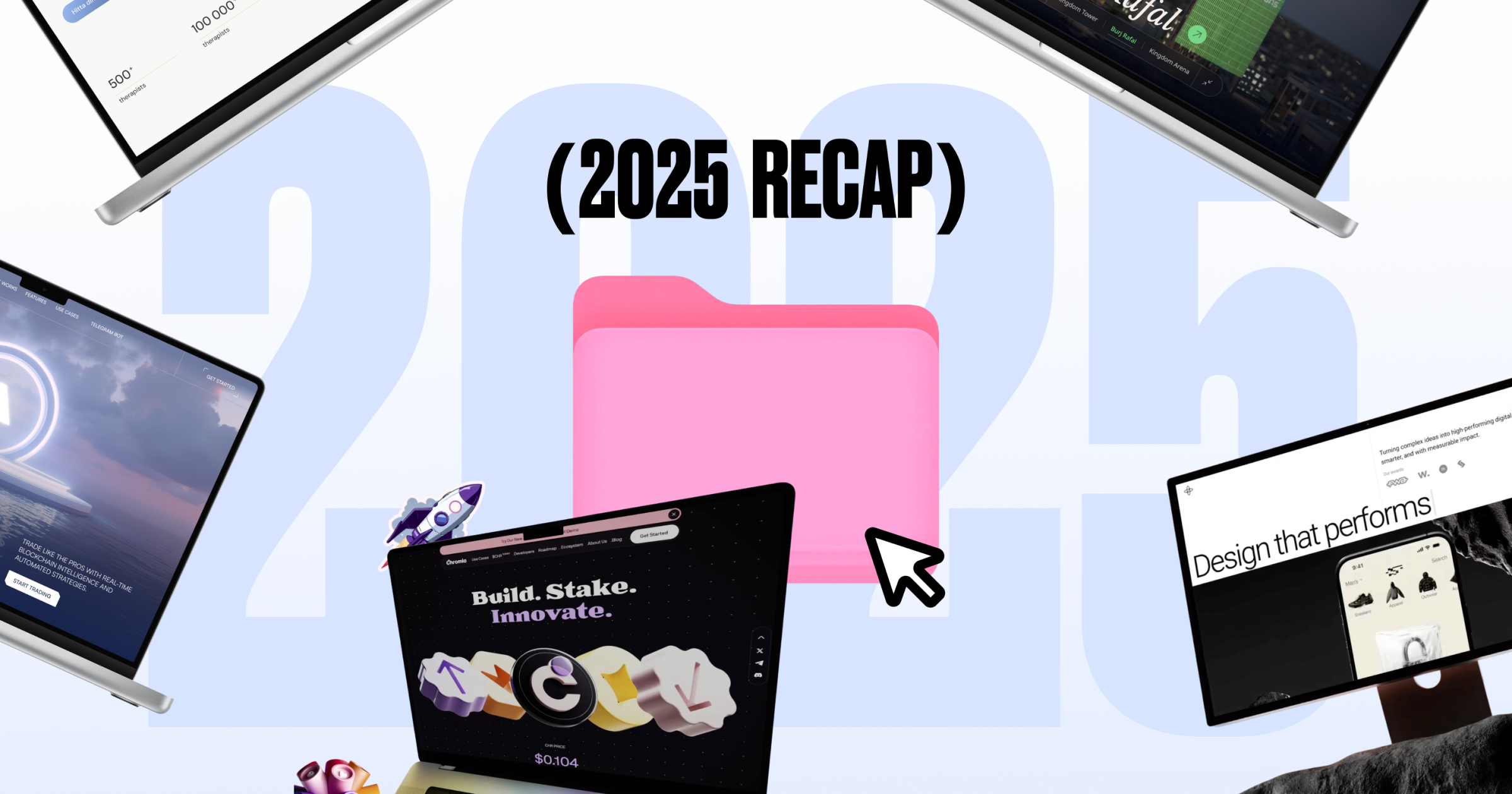

Not long ago, AI was the shiny new thing; today it’s how our designers solve creative challenges every day. We, the Sigma Software Design Team, collected stories from our professionals who’ve become AI-fluent and are eager to share what they’ve learned: from UI specialists using AI for user research, to motion designers leveraging such tools for complex animation, to graphic artists generating outstanding visuals from a simple prompt. Here’s what they told us about the reality of working with artificial intelligence.

I believe that most designers today have already integrated AI into their working process. While there’s a popular opinion that AI will take our jobs, let’s be real — it still doesn’t have a good eye for understanding what makes a design look great and what doesn’t. That’s why I see AI as an instrument, not a replacement for professionals.

I usually apply AI early, at the discovery stage. It helps me dive deeper into the industry, business goals, and users’ needs. As designers, we all get overloaded with information about domain specifics, competitor research, and user feedback. Even when all the documentation is well structured, it’s much faster and easier to analyze everything with AI.

However, AI has its limits at this stage. Competitor analysis, for example, is not AI’s strong suit: even the latest models tend to suggest outdated or irrelevant data about business competitors. So it’s better to use your own judgment and double-check everything.

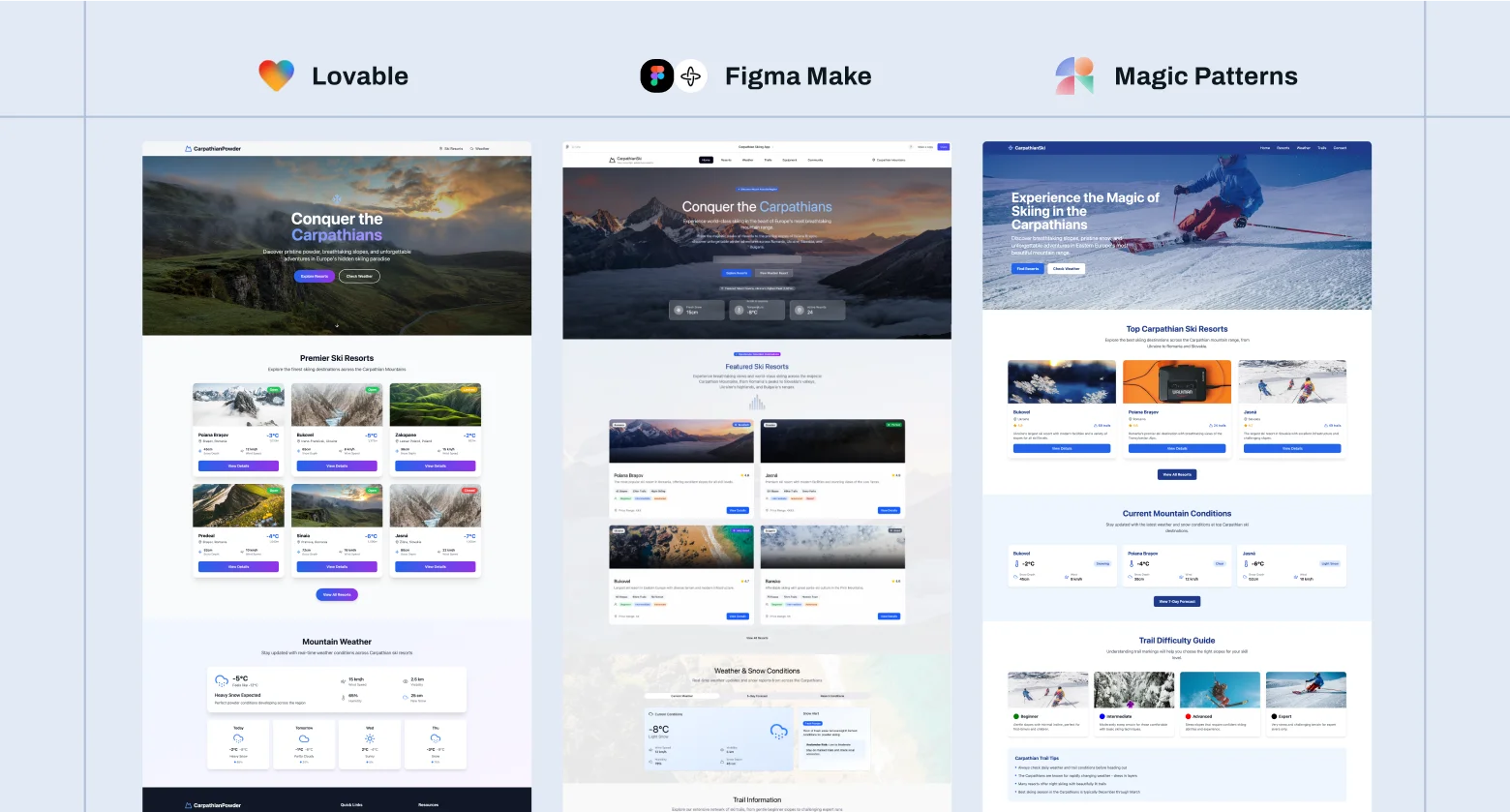

I’ve experimented with lots of tools for creating ready-to-use UX/UI solutions, and Lovable, Figma Make, and Magic Patterns proved to be the most effective for me. For example, here’s the result of my prompt to create a website for mountain lovers:

As you can see, these designs look pretty generic. There’s always a risk that if we rely heavily on AI for UI/UX, everything will start looking similar. The unique, human touch could disappear, leaving us with standardized templates instead of creative solutions.

On top of that, getting a design complex enough not to look like a template often takes a lot of work and many iterations. AI output is unpredictable, so it takes time to arrive at a solid result.

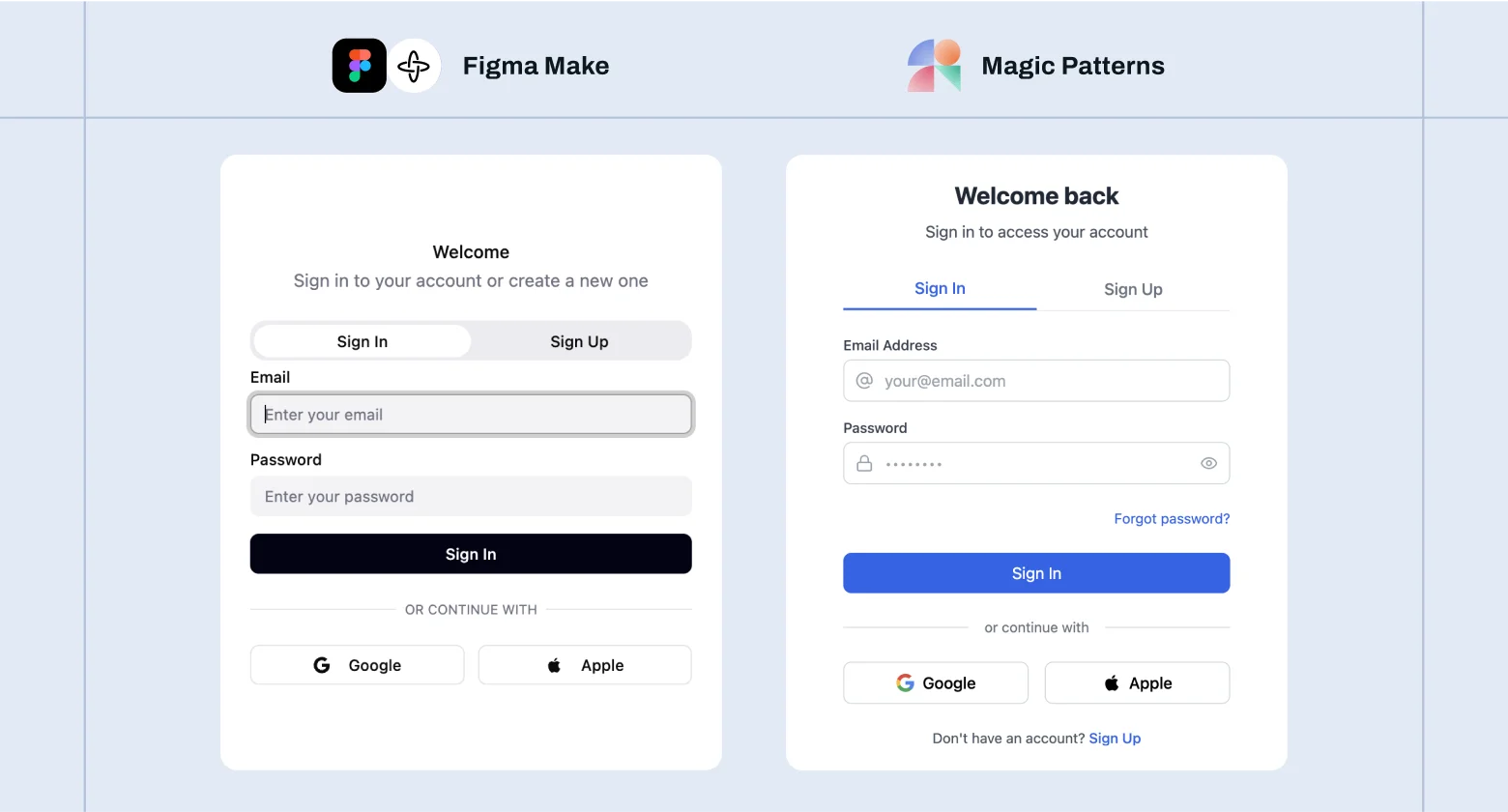

That said, AI handles small UI components quite well. Toggles, sign-in forms, and basic state transitions are easy to generate and often usable out of the box. Figma plans to improve in this direction, but for now, AI output for complex or dynamic components is still far from high quality.

AI tools are also useful for quick prototyping and idea validation.

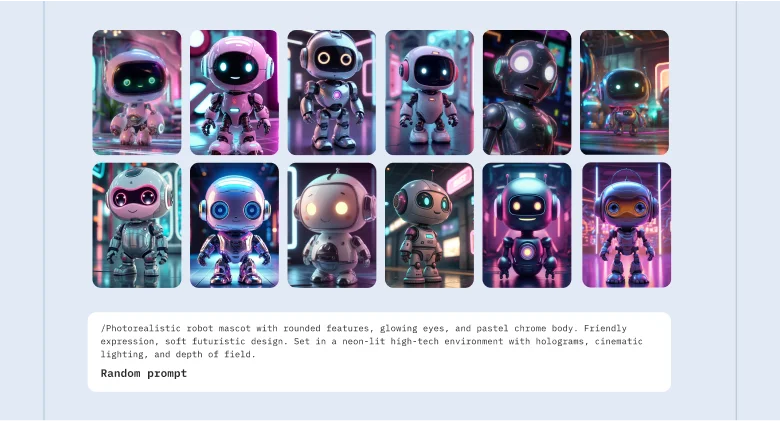

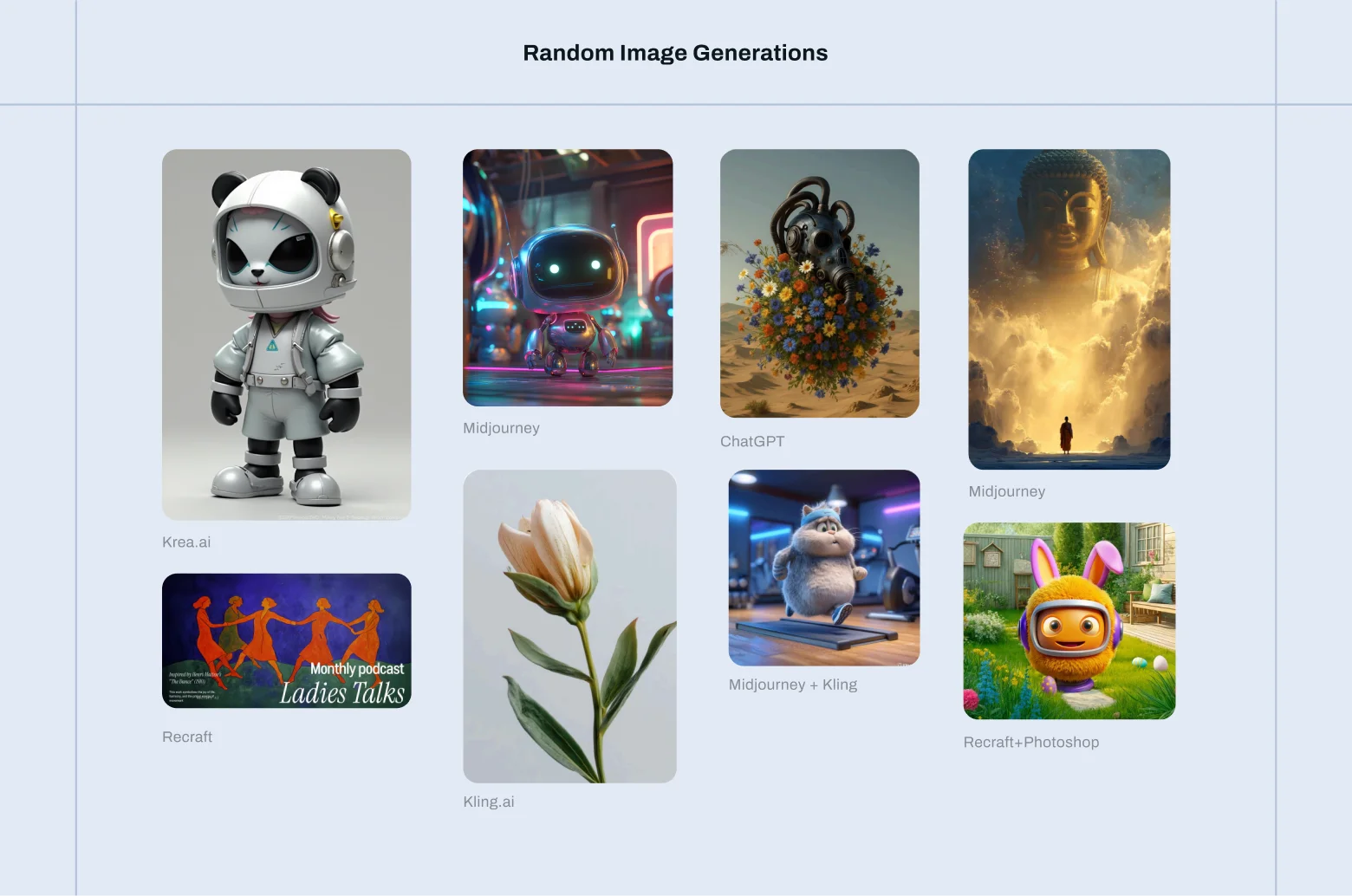

A lot of narrow-profile tools can be helpful at the concept creation stage. Of course, simple, non-specific prompts usually produce basic, standard visuals across different models. So, again, combining the human touch with AI capabilities is essential for impressive outcomes. Since there is always a designer behind any creative idea, this is where the art of prompting comes in:

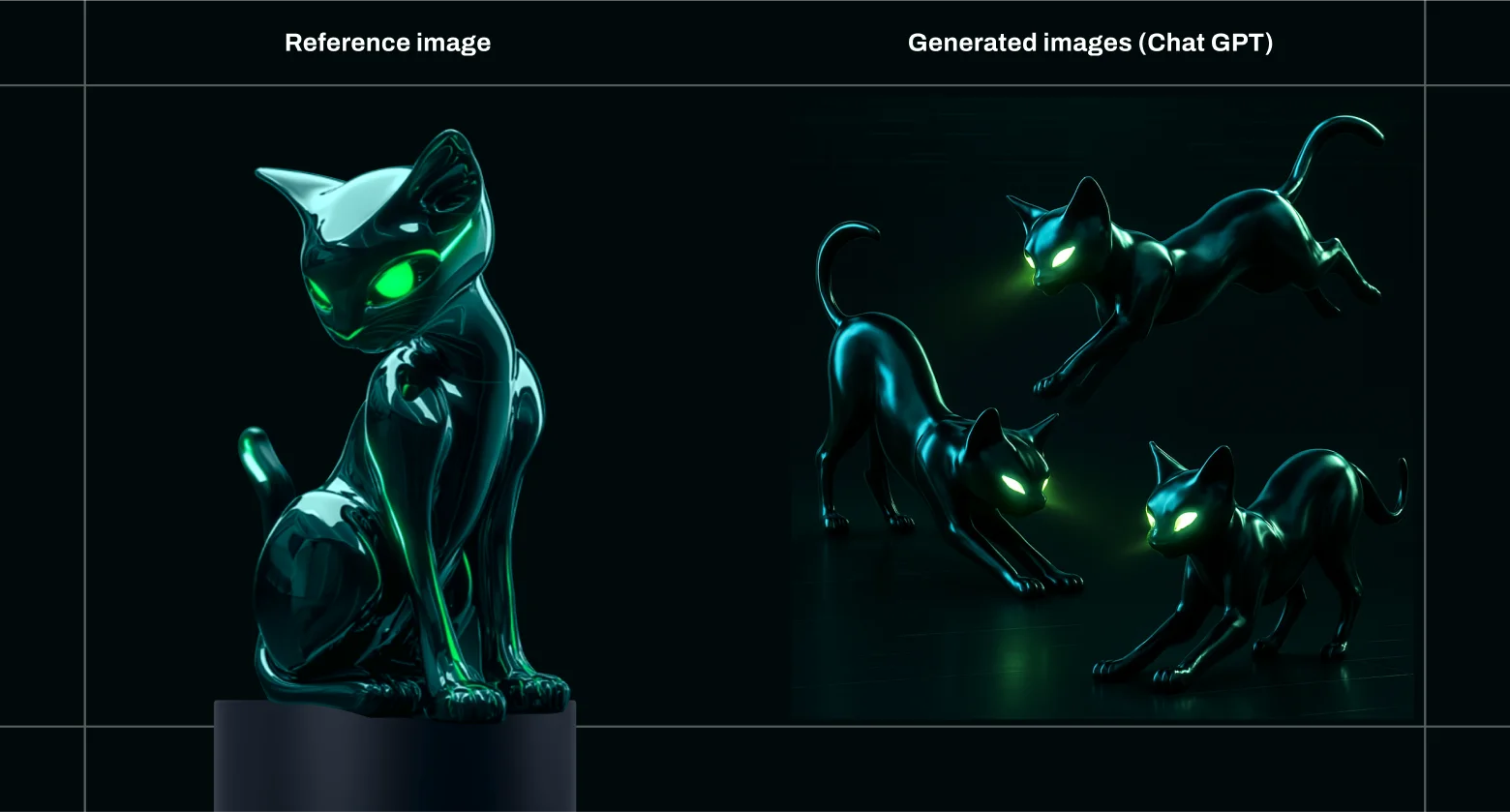

Personally, I prefer ChatGPT and Krea.ai for such tasks. The latter is very useful thanks to its Inpainting tool, which lets you change particular assets in your visual. For example, here you can see how I dressed up my mascot using visuals of a jacket and a helmet. The biggest problem with using AI for concepts and mascots is consistency. Still, I think the latest version of ChatGPT, running GPT-5, handles such requests pretty well.

Finally, many of today’s platforms aim to give designers an all-purpose tool that unifies different AI models working with text, images, and video. For example, Weavy works as an aggregator of several models and can generate both images and videos. That’s why I believe the future of AI tools lies in this universal approach rather than in a separate tool for each task.

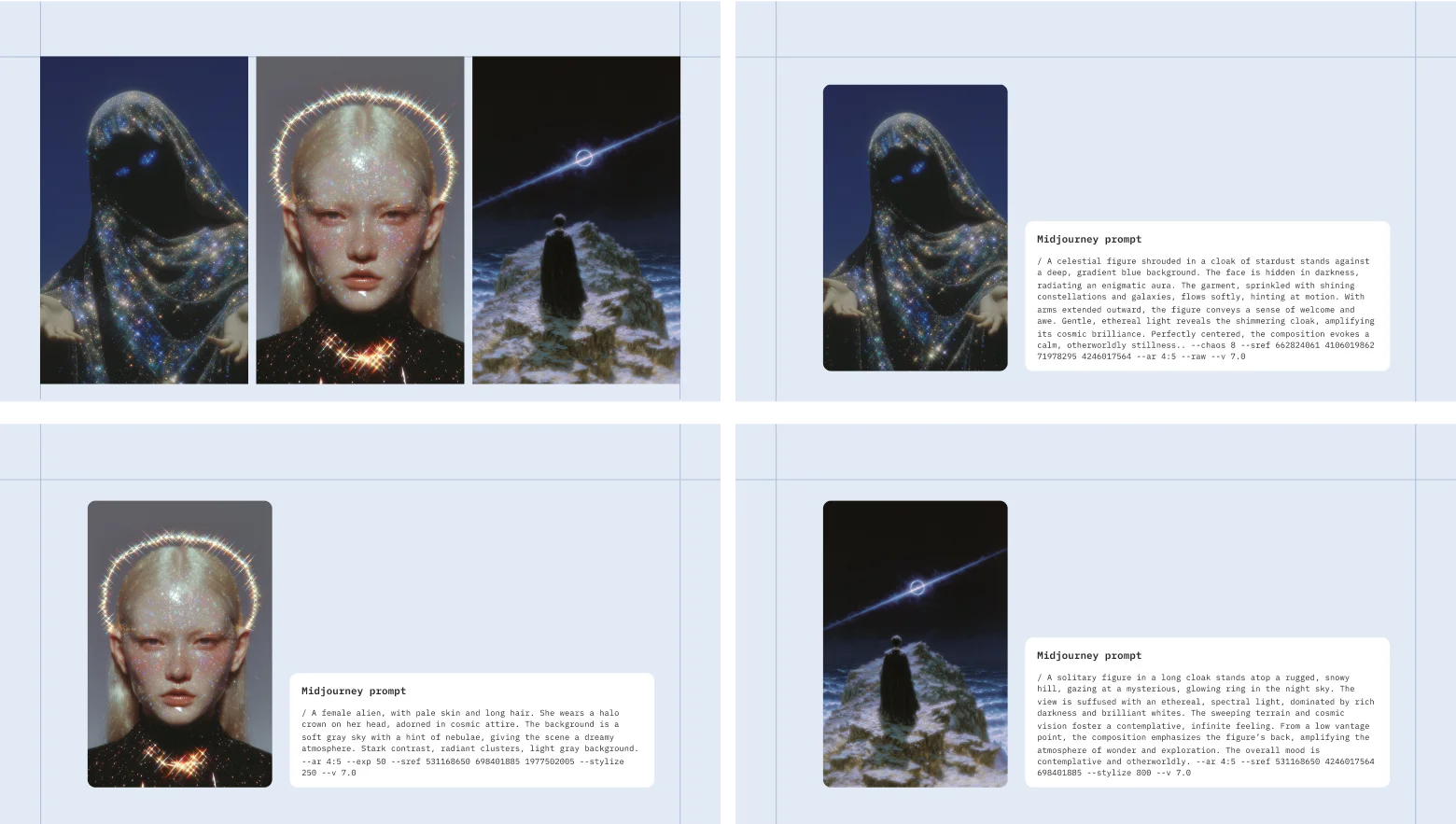

Today, using AI is all about trying new tools and exploring the results they provide. In my work, I mostly use two platforms. The first is the well-known and highly popular Midjourney, which I use almost every day. It has disrupted my motion design workflow: it lets me animate visuals in just one click and easily create 3D models. Note, however, that the models can’t be edited; instead, you can generate and regenerate lighting, perspective, and textures. That’s why I usually combine Adobe After Effects with AI tools, which helps me achieve fantastic results.


It took a huge number of AI-generated images to create this video in a matching style. The biggest challenge with AI is that it still struggles with consistency. So I started with simple prompts, then added more and more details and used visual references to communicate with the machine properly. It’s also helpful to use the ‘Search by Image’ feature in Midjourney and start from existing images. For example, I search for “tablet”, select the picture that best fits my needs, and refine it with various prompts. Many designers generate prompts using AI, but I write mine myself, and the results turn out much better than those from prompts generated by ChatGPT.

The second tool I use, ComfyUI, is less popular and far more complex than Midjourney. However, it offers almost limitless possibilities. At first sight, ComfyUI looks intimidating because of its node-based interface. Yet once you learn how to use it, you can generate almost anything.


ComfyUI runs locally, so you need to install it on your computer. It’s one of the best AI tools for creating consistent visuals, thanks to a model that can analyze a character, prepare its “skeleton,” and then generate it in different poses. All you need to do is upload as many images of your character or object as possible. Note that the app is a resource hog: it requires a powerful graphics card and a lot of RAM. Plus, you’ll have to invest some time in learning the tool.

As a graphic designer, my work often goes beyond just creating graphics. I take on tasks involving video, audio, and music, so I use a whole suite of different AI tools to handle them more efficiently.

Below you can find a table with the rating of tools I apply daily:

I would also recommend Fontjoy or Khroma for working on font and color pairings. For scripting, realistic image cloning, or video editing, Veed, DeepAI, and Higgsfield can help.

Working with different AI tools can sometimes be overwhelming. So, before you dive in, let me share a few tips that made my AI journey smoother and may help you improve your own AI experience:

- Master your prompting skills with ChatGPT and DALL·E

  Learning how to communicate with machines makes all the difference in getting the results you want. Don’t be afraid to experiment with different approaches until you find the one that works best for you.

- Mix and match tools for even better outcomes

  I often combine Recraft and Photoshop. Recraft helps me modify illustrations and add elements, while Photoshop gives me the control I need for final touches.

- Use paid versions of the tools for commercial work

  Free versions can be risky for business use due to copyright issues. There have already been high-profile lawsuits, such as Disney and Marvel suing Midjourney over AI-generated copies of their characters. Always read the Terms & Conditions of the AI tools you use: some platforms, like Kling, don’t give you exclusive rights to your generated content, while others, like Runway (with a paid subscription), let you keep full ownership as long as your prompts are original.

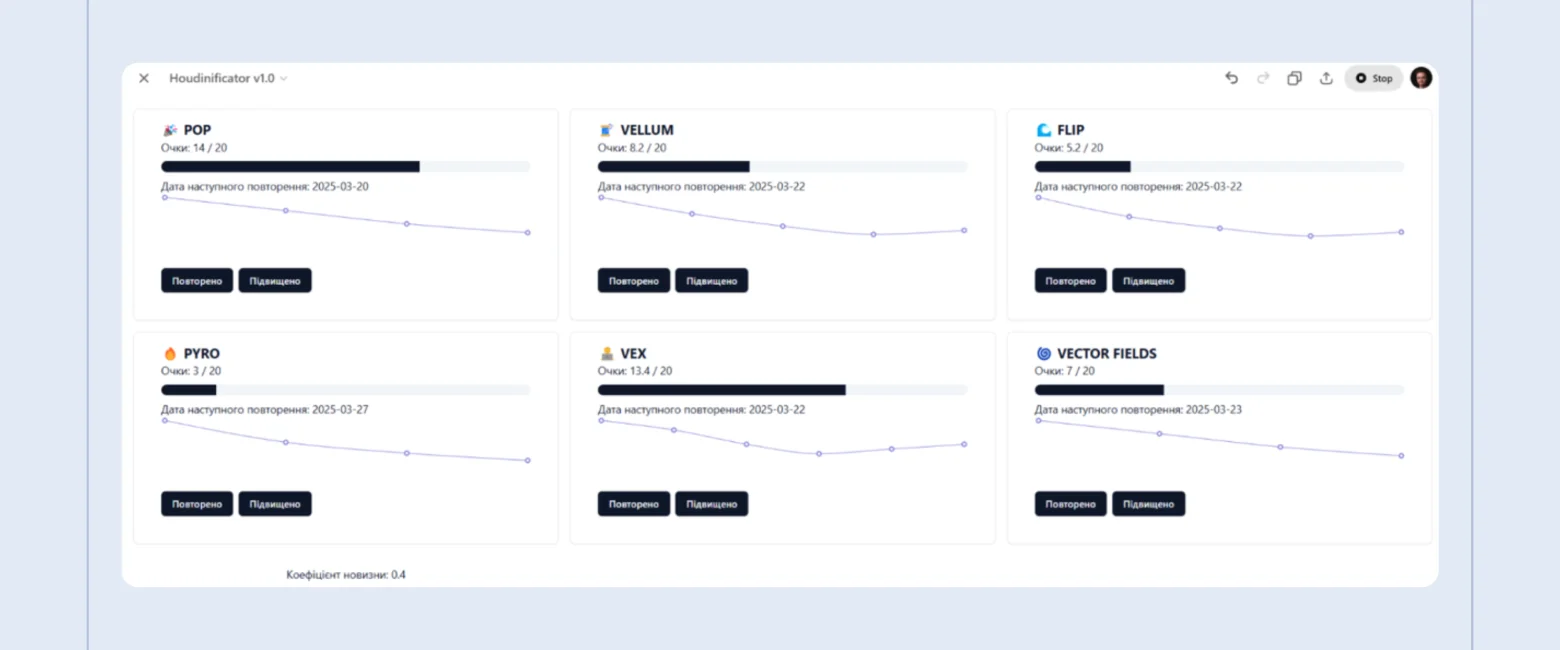

AI tools serve a double purpose for me: they help not only with creating designs but also with learning new things. As a motion and 3D designer, I often use complex software such as Houdini. Sometimes it’s difficult to recall specific functions, like how to recreate liquid in 3D. A typical scenario: you’ve created it once and then forgotten everything by the time you need it again (usually ASAP). I didn’t want this to slow my design process down, so I needed a smart solution.

And I found one: spaced repetition. This approach is based on reviewing what you’ve learned at increasing intervals over time. It works like this: if you get it right, you can wait longer before trying again; if you get it wrong, you go back to practicing it more often. The same approach is used in the well-known Duolingo app.
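The scheduling rule described above can be sketched in a few lines of Python. This is a minimal, hypothetical illustration, not how the Houdinificator or Duolingo actually schedule tasks: it assumes a simple Leitner-style rule where a correct answer doubles the waiting interval and a mistake resets it to one day. The names `Card`, `review`, `due_today`, `MIN_INTERVAL_DAYS`, and `GROWTH_FACTOR` are invented for this example.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta

# Hypothetical scheduling constants; real tools tune these much more carefully.
MIN_INTERVAL_DAYS = 1
GROWTH_FACTOR = 2


@dataclass
class Card:
    """A single practice task, e.g. 'recreate liquid in Houdini' (hypothetical)."""
    task: str
    interval_days: int = MIN_INTERVAL_DAYS
    due: date = field(default_factory=date.today)


def review(card: Card, correct: bool, today: date) -> Card:
    """Reschedule a card after one practice attempt."""
    if correct:
        # Got it right: wait longer before the next attempt.
        card.interval_days *= GROWTH_FACTOR
    else:
        # Got it wrong: go back to practicing it often.
        card.interval_days = MIN_INTERVAL_DAYS
    card.due = today + timedelta(days=card.interval_days)
    return card


def due_today(cards: list[Card], today: date) -> list[Card]:
    """Return the tasks scheduled for practice on or before `today`."""
    return [c for c in cards if c.due <= today]


# Usage: two correct answers push the task further out; a mistake pulls it back.
start = date(2025, 1, 1)
liquid = Card("Recreate liquid in 3D", due=start)
review(liquid, correct=True, today=start)               # interval 2, due 2025-01-03
review(liquid, correct=True, today=date(2025, 1, 3))    # interval 4, due 2025-01-07
review(liquid, correct=False, today=date(2025, 1, 7))   # interval 1, due 2025-01-08
```

Doubling is the simplest rule that matches the "wait longer if you get it right" idea; schedulers such as SM-2 instead adjust the growth factor per task based on how easy each answer felt.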

So, instead of rewatching tutorials, I created the so-called Houdinificator, a ChatGPT-based learning platform that helps me master various Houdini frameworks by completing AI-generated tasks. After I complete the tasks, ChatGPT provides feedback and suggests improvements. I’ve also added various parameters to this learning tool, such as progress tracking and repetition settings. It also gamifies learning, which motivates me to keep repeating the tasks. You can play around with it and set it up however you like. Here’s what my version looks like:

Key Takeaways:

Whether you need to discover business specifics, prototype, create concepts, generate videos, or solve complex design problems, AI becomes your creative partner, helping you work faster and bring creative ideas to life freely. What’s the catch? AI tools are powerful, but they’re still just instruments. What makes the magic happen is the designers using them: their creative vision, aesthetic sense, and ability to think critically to shape meaningful, high-quality results.

AI is getting smarter every day, and the pace of innovation shows no signs of slowing down. We’re excited to experiment and discover new ways to integrate AI into our creative process. So expect updates on the newest tools we’re testing and how they help us deliver even better results for our clients and projects. The future of design is already here, and we can’t wait to see where it takes us next. Stay tuned!

